Personal background

The idea of controlling technology by telling it what to do has been compelling for a long time. In fact, when I was part of a “voice portal” startup in 1999-2001 (Quack.com, which rolled into AOLbyPhone 2001-2004 or so), there was a joking acknowledgement that the tech press announces “speech recognition is ready for wide use” about every ten years, like clockwork. Our startup launched around the third such crest of press optimism. And like movies on similar topics that release the same summer, there was a new crop of voice portal startups at the time (e.g., TellMe and BeVocal). Like the browser wars of a few years earlier between Netscape and IE, in which they’d pull pranks like sneaking their logo statue into the competitor’s office yard, we spiked TellMe car antennas with rubber ducks in their parking lot. Those were crazy, fun days when the corner of University and Bryant in Palo Alto seemed like the center of the universe, long hours in our little office felt like a voyage on a Generation Ship, and pet food was bought online. And a little more than a decade later, Apple has bought Siri to do something similar, and Google and Microsoft have followed.

The idea that led to our startup was wanting to help people compare prices with other stores while viewing products at a brick-and-mortar store. Mobile phones then had poor cameras and browsers, so the most feasible interaction method was to verbally select a product type, brand, and model by first calling an Interactive Voice Response (IVR) service. But a web startup needs more traffic than once-a-week shopping, so other services were added such as movie showtimes, sports scores, stock quotes, news headlines, email reading and composing (via audio recording), and even restaurant reviews. This was before VoiceXML reached v1.0 and we used a proprietary xml variant developed in-house alongside our Microsoft C++-based servers. We were the first voice portal to launch in the US, and that was with all services except the price comparison feature that was our initial motivation. It hasn’t reappeared on any voice portal since, that I know of.

As any developer knows, building on standards often provides many advantages. Once VXML 1.0 appeared, I wanted to see if we could migrate to it, so I bought a Macintosh G4 with OS X v1 when the Apple store first opened in Palo Alto, and used the Java “jar” wrappers for its speech recognition and generation features to prototype a vxml “browser”. When it supported 40% of the vxml spec, I shared it with our startup, recently bought by AOL, but they passed. I stopped work on it and released it as open-source through the Mozilla Foundation (see vbrowse.mozdev.org).

More than a decade later, markup-based solutions like vxml still seem like the most productive way of creating speech-driven applications (compared to, say, creating a Windows-only application using Dragon NaturallySpeaking).

Application design

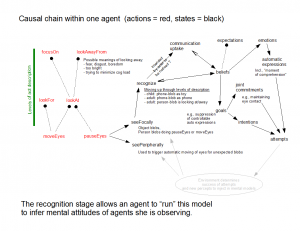

State-of-the-art web applications tend to adopt the Model-View-Controller design pattern, where the model is a JSON finite-state machine representation of all the states (e.g., ViewingInbox, ComposingMessage) supported, and JavaScript is used as the controller to create DOM/HTML views, handle user actions, and manage data transfers with the server. This is also the pattern of newer W3C specs like SCXML that aim to support “multi-modal” interactions such as requesting a mapped location on one’s mobile phone by speaking the location (i.e., speech is one mode) and having the map appear in the browser (i.e., browser items are another mode). As “pervasive computing” develops and is able to escape the confines of mobile phones and laptops, additional modes needing support are likely to be, first, recognizing that the user is pointing to something and resolving what the referent is, and second, tracking the gaze of the user and recognizing what it’s fixated upon, as a kind of augmented reality hover gesture. Implementing and integrating such modes is part of my interest in the larger topic of intention perception; if you are interested in how these modes fit into a larger theoretical context, I highly recommend the entry on Theory of Meaning (and Reference) in the Stanford Encyclopedia of Philosophy, and Herb Clark’s book “Using Language”.

Vxml is up to v3.0 now, and it might support integration with these non-speech modes. But vxml 2.0 and 2.1 are supported more widely, and creating applications with them that follow the design pattern above requires a bit of thinking. The remainder of this article will share my thoughts and discoveries about how to do that with an excellent freemium platform, Voxeo.com.

Tips on Using Vxml 2.1

Before attempting to create a vxml application, I strongly recommend getting a book on the topic or reading the specs online. But as a quick overview, think of a conversation as a series of paired turns. In each pair, the speaker already has in mind how the other person might respond to what he is about to say; he then says it, usually allowing the other person to interrupt; and as long as the other person says something intelligible, the speaker responds with another turn. Under this description, the speaker’s turn gets most of the attention, but the respondent’s turn usually determines what happens next. Each such pair can be conceived of as a state in a finite-state machine, where all the speaker’s reactions to the respondent correspond to transitions out of that state.
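To make the finite-state view concrete, here is a minimal JavaScript sketch of such a model. The state names echo the form ids used in the vxml template later in this article, but the prompts, the 'Done' state, the 'otherwise' property, and the nextState helper are all assumptions made up for illustration; none of them is part of vxml.

```javascript
// Hypothetical conversation model: each state pairs a prompt (the speaker's
// turn) with the transitions triggered by what the respondent says.
var oConversation = {
  start: 'WaitForInstruction',
  states: {
    WaitForInstruction: {
      prompt: 'What would you like to do?',
      transitions: { stop: 'WaitForInstruction', shutdown: 'GetConfirmationOfShutdown' },
      otherwise: 'GetConfirmationOfInstruction'
    },
    GetConfirmationOfShutdown: {
      prompt: 'You want to shut down. Is that correct?',
      transitions: { yes: 'Done', no: 'WaitForInstruction' },
      otherwise: 'GetConfirmationOfShutdown'
    },
    GetConfirmationOfInstruction: {
      prompt: 'Is that correct?',
      transitions: { yes: 'WaitForInstruction', no: 'WaitForInstruction' },
      otherwise: 'GetConfirmationOfInstruction'
    },
    Done: { prompt: 'Goodbye.', transitions: {}, otherwise: 'Done' }
  }
};

// Returns the name of the state to enter after hearing sHeard in sStateName.
function nextState(oModel, sStateName, sHeard) {
  var oState = oModel.states[sStateName];
  if (!oState) {
    throw new Error('Unknown state: ' + sStateName);
  }
  if (Object.prototype.hasOwnProperty.call(oState.transitions, sHeard)) {
    return oState.transitions[sHeard];
  }
  return oState.otherwise; // anything else heard in this state
}
```

For example, nextState(oConversation, 'WaitForInstruction', 'shutdown') yields 'GetConfirmationOfShutdown', while any unlisted utterance in that state falls through to 'GetConfirmationOfInstruction'.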

To implement such a set of states in vxml 2.0 or 2.1, one can create a single text document (aka “Single Page Application (SPA)”) with this as a start,

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE vxml SYSTEM "http://www.w3.org/TR/voicexml21/vxml.dtd">

<vxml version="2.1">

</vxml>

and then for each state, insert a variant of the following between the ‘vxml’ tags:

<form id="fYourNameForTheState">

</form>

To implement each state, add a variant of the following within each ‘form’ element,

<field name="ffYourNameForTheState">

<grammar mode="voice" xml:lang="en-US" root="gYourNameForTheState" tag-format="semantics/1.0">

<rule id="gYourNameForTheState">

...All the things the speaker might expect the respondent to say that are on-task...

</rule>

</grammar>

<prompt timeout="10s">...The primary thing the speaker wants to tell the respondent in this state, perhaps a question...</prompt>

<noinput>

<prompt>...What to say if the prompt finishes and the respondent is silent all through the timeout duration...</prompt>

</noinput>

<nomatch>

<prompt>...What to say as soon as any mismatch is detected between what the respondent is saying and what the speaker was expecting in the grammar; "I didn't get that" is a good choice...</prompt>

</nomatch>

<filled>

<if cond="ffYourNameForTheState.result &amp;&amp; (ffYourNameForTheState.result == 'stop')">

<goto next="#fWaitForInstruction"/>

<elseif cond="ffYourNameForTheState.result &amp;&amp; (ffYourNameForTheState.result == 'shutdown')" />

<goto next="#fGetConfirmationOfShutdown"/>

<else />

<assign name="_oInstructionHeard" expr="ffYourNameForTheState"/> <!-- Assumes _oInstructionHeard was declared outside this form in a 'var' or 'script' -->

<goto next="#fGetConfirmationOfInstruction"/>

</if>

</filled>

</field>

We’ll discuss grammars in more depth below, and the rest of the template is largely self-explanatory. But a few minor points:

- If you need to recognize only something basic like yes-or-no or digits in a form, then you can remove the ‘grammar’ element and instead add one of these to the ‘field’ element:

type="boolean"

type="digits"

type="number"

- Grammars can appear outside ‘field’ as a child of ‘form’, but then they are active in all fields of the form. There are cases in which doing so is good design, but it’s not the usual case.

- The only element that “needs” a timeout for the respondent being silent is ‘noinput’; yet, the attribute is required to be part of ‘prompt’ instead.

- ‘goto’s can go to other fields in the same form, or different forms, but not to a specific field of another form.

I’ve made the ‘filled’ part less generalized than the other parts to illustrate a few points:

- The contents of the ‘filled’ element is where you define all of the logic about what to do in response to what the respondent has said.

- Although I’ve indented if-elseif-else to highlight their usual semantic relation to each other, you can see that actually ‘if’ contains the other two, and that ‘elseif’ and ‘else’ don’t actually contain their then-parts (which is somewhat contrary to the spirit of XML).

- The field name is treated as if it contains the result of speech recognition (because it does), and it does so as a JavaScript object variable that has named properties.

- The field variable is lexically scoped to the containing form, so if you want to access the results of speech recognition in another form (perhaps after following a ‘goto’), then you first must have a JavaScript variable whose scope is outside either of the forms, and assign it the object held by the field variable.

- A boolean AND in a condition must be written as &amp;&amp; to avoid confusing the XML parser. (Wrapping the condition in CDATA won’t help here, because CDATA sections aren’t allowed inside attribute values.)

- Form id’s can be used like html anchors, so a local url for referencing a form starts with url fragment identifier ‘#’ followed by the form’s id.

Note that it’s not necessary to start form id’s with “f”, or fields with “ff”, or grammars with “g”, nor is it necessary to repeat names across them like I do here. But I find that simplifying this way helps keep the application from seeming over-complicated.

Creating grammars

To implement the grammar content indicated above by placeholder text, “…All the things the speaker might expect the respondent to say that are on-task…,” one provides a list of ‘one-of’ and ‘item’ elements. ‘one-of’ is used to indicate that exactly one of its child items must be recognized. ‘item’ has a ‘repeat’ attribute that takes such values as “0-1” (i.e., can occur zero or one times), “0-” (i.e., can occur zero or more times), “1-” (i.e., can occur one or more times), “7-10” (i.e., can occur 7 to 10 times), and so on. ‘item’ takes one or more children, which can be the words to recognize or any permutation of ‘item’ and ‘one-of’ elements, which can have their own children, and so on. The children of a ‘rule’ or ‘item’ element are implicitly treated as an ordered sequence, so all the child elements must be recognized for the parent to be recognized. (This formalism might remind you of Backus-Naur Form (BNF) for describing a context-free grammar (CFG). If you need a grammar more expressive than a CFG, you’ll have to impose the additional constraints in post-processing that follows speech recognition.)
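If it helps to see those semantics spelled out, here is a toy matcher in JavaScript that mimics how ‘one-of’, ordered sequences, and the ‘repeat’ attribute combine. It is only a sketch for reasoning about a grammar on paper: the node shapes (oneOf, seq, repeat/min/max) and the function names are invented for this example, it works on typed words rather than audio, and it is not how a vxml platform implements recognition.

```javascript
// Toy grammar nodes (hypothetical shapes, not SRGS syntax):
//   'word'                      a literal token
//   { oneOf: [node, ...] }      like <one-of>: exactly one alternative
//   { seq: [node, ...] }        like the children of <rule> or <item>: in order
//   { repeat: node, min, max }  like <item repeat="min-max">

function unique(aNumbers) {
  return aNumbers.filter(function (x, i) { return aNumbers.indexOf(x) === i; });
}

// Returns every token position at which `node` can finish matching,
// given that it starts matching at position iStart.
function matchEnds(node, aTokens, iStart) {
  var aEnds = [];
  if (typeof node === 'string') {
    return aTokens[iStart] === node ? [iStart + 1] : [];
  }
  if (node.oneOf) {
    node.oneOf.forEach(function (alternative) {
      aEnds = aEnds.concat(matchEnds(alternative, aTokens, iStart));
    });
    return unique(aEnds);
  }
  if (node.seq) {
    aEnds = [iStart];
    node.seq.forEach(function (child) {
      var aNext = [];
      aEnds.forEach(function (iPos) {
        aNext = aNext.concat(matchEnds(child, aTokens, iPos));
      });
      aEnds = unique(aNext);
    });
    return aEnds;
  }
  if (node.repeat) {
    // Repetitions beyond min + remaining tokens could only match nothing.
    var iCap = Math.min(node.max, node.min + (aTokens.length - iStart));
    var aFrontier = [iStart];
    if (node.min === 0) { aEnds.push(iStart); }
    for (var n = 1; n <= iCap && aFrontier.length > 0; n++) {
      var aReached = [];
      aFrontier.forEach(function (iPos) {
        aReached = aReached.concat(matchEnds(node.repeat, aTokens, iPos));
      });
      aFrontier = unique(aReached);
      if (n >= node.min) { aEnds = aEnds.concat(aFrontier); }
    }
    return unique(aEnds);
  }
  throw new Error('Unknown grammar node');
}

// True if the whole utterance is covered by the grammar.
function recognizes(grammar, sUtterance) {
  var aTokens = sUtterance.split(/\s+/).filter(Boolean);
  return matchEnds(grammar, aTokens, 0).indexOf(aTokens.length) !== -1;
}

// Example: an action, then zero or more "and [then] action" continuations.
var oAction = { oneOf: ['drill', 'bore'] };
var oGrammar = { seq: [
  oAction,
  { repeat: { seq: ['and', { repeat: 'then', min: 0, max: 1 }, oAction] }, min: 0, max: Infinity }
] };
```

With this example grammar, "drill and then bore" is accepted because the optional "then" may or may not appear, whereas "drill then bore" is rejected because "and" is a required part of each continuation.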

If the contents of the grammar rule take up more than about five lines, it’s good practice, as in other coding languages, to modularize that content into an external file. Each such grammar module is referenced from within an inline ‘item’ like this,

<grammar mode="voice" xml:lang="en-US" root="gGetCommand" tag-format="semantics/1.0">

<rule id="gGetCommand">

<one-of>

<item>

<ruleref uri="myCommandLanguage.srgs.xml#SingleCommand" type="application/grammar-xml"/>

</item>

<item>

<ruleref uri="myCommandStop.srgs.xml#Stop" type="application/grammar-xml"/>

</item>

</one-of>

</rule>

</grammar>

and the external grammar file should have this form:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE grammar PUBLIC "-//W3C//DTD GRAMMAR 1.0//EN"

"http://www.w3.org/TR/speech-grammar/grammar.dtd">

<grammar version="1.0" xmlns="http://www.w3.org/2001/06/grammar" xml:lang="en-US" tag-format="semantics/1.0" root="SingleCommand" >

<rule id="SingleCommand" scope="public">

...A sequence of 'one-of' and 'item' elements describing single commands you want to support...

</rule>

<rule id="SubgrammarOfSingleCommand" scope="public">

...Details about a particular command that would take too much space if placed inside the SingleCommand rule...

</rule>

</grammar>

Defining the Recognition Result

Human languages usually allow any intended meaning to be phrased in several ways, so useful speech apps need to accommodate this by providing as many expected paraphrases as seem likely to be used. So, a grammar often has several ‘one-of’s to accommodate paraphrases. A naive approach for a speech app would be to provide such paraphrases in the grammar, and take recognition results in their default format of a single string, and then try to re-parse that string with JavaScript case-switch-logic similar to the ‘one-of’s in the markup — a duplication of work (ugh) with the attendant risk that the two will eventually fall out of sync (UGH!). What would be much preferred would be to retain the parse structure of what’s recognized and return that instead of a (flat) string; in fact, this is just what the “semantic interpretation” capability of vxml grammars offers. To make use of this capability, a few things are needed (these may be Voxeo-specific):

- The ‘grammar’ elements in both the vxml file and the external grammar file(s) must use attributes

tag-format="semantics/1.0" plus root="yourGrammarsRootRuleId"

- ‘tag’ elements must be placed in the grammars (details on how below), and they must assume there is a JSON object variable named ‘out’ to which you must assign properties and property-values. If instead you assign a string to ‘out’ anywhere in your grammar, then recognition results will revert to flat-string format.

- If using Voxeo, ‘ruleref’ elements that refer to an external grammar must use attribute type="application/grammar-xml", which doesn’t match the type suggested by the vxml2.0 spec, “application/srgs+xml” (see http://www.w3.org/TR/speech-grammar/#S2.2.2).

To use ‘tag’ elements for paraphrases, one can do this,

<rule id="Stop" scope="public">

<one-of>

<item>stop</item>

<item>quit</item>

</one-of>

<tag>out.result = 'stop'</tag>

</rule>

in which the ‘result’ property was chosen by me, but could have been any legal JSON property name. The only real constraint on the choice of property name is that it make self-documenting sense to you when you refer to it elsewhere to retrieve its value.

‘tag’ elements can also be children of ‘item’s, which makes them a powerful tool for structuring the recognition result. For example, a grammar rule can be configured to create a JSON object:

<rule id="ParameterizedAction" scope="public">

<one-of>

<item>

<one-of>

<item>drill</item>

<item>bore</item>

</one-of>

<ruleref uri="#DrillSpec"/>

<tag>

out.action = 'drill';

out.measure = rules.latest().measure;

out.units = rules.latest().units;

</tag>

</item>

...

</one-of>

</rule>

In this example, we rely on knowing that the “DrillSpec” rule returns a JSON object having “measure” and “units” properties, and we use those to create a JSON object that has those properties plus an “action” property.

‘tag’ elements can also be used to create a JSON array:

<rule id="ActionExpr" scope="public">

<tag>

out.steps = [];

function addStep(satisfiedParameterizedActionGrammarRule) {

var step = {};

step.action = satisfiedParameterizedActionGrammarRule.action;

step.measure = satisfiedParameterizedActionGrammarRule.measure;

step.units = satisfiedParameterizedActionGrammarRule.units;

out.steps.push(step);

}

</tag>

<item>

<ruleref uri="#ParameterizedAction"/>

<!-- This use of rules.latest() should work according to http://www.w3.org/TR/semantic-interpretation/#SI5 -->

<tag>addStep(rules.latest())</tag>

</item>

<item repeat="0-">

<item>

and

<item repeat="0-1">then</item>

</item>

<ruleref uri="#ParameterizedAction"/>

<tag>addStep(rules.latest())</tag>

</item>

</rule>

These object- and array-construction techniques can be used in other rules that you reference as sub-grammars of these, allowing you to create a JSON object that captures the complete logical parse structure of what is recognized by the grammar.
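As a rough illustration of where this leads, the sketch below shows the shape of object the “ActionExpr” rule above would be expected to produce, along with a small post-processing check of the kind a grammar cannot express by itself. The sample utterance, the unit names, and the isWellFormedInstruction function are assumptions for illustration, since the “DrillSpec” rule isn’t shown here.

```javascript
// Hypothetical result for an utterance like "drill two inches and then bore
// five millimeters". Both 'drill' and 'bore' map to action 'drill' because
// the grammar treats them as paraphrases.
var oExampleResult = {
  steps: [
    { action: 'drill', measure: 2, units: 'inches' },
    { action: 'drill', measure: 5, units: 'millimeters' }
  ]
};

// Assumed list of units the application knows how to handle.
var aKnownUnits = ['inches', 'millimeters'];

// Post-recognition check: at least one step, and every step has an action,
// a positive measure, and a known unit.
function isWellFormedInstruction(oResult) {
  if (!oResult || !Array.isArray(oResult.steps) || oResult.steps.length === 0) {
    return false;
  }
  for (var i = 0; i < oResult.steps.length; i++) {
    var oStep = oResult.steps[i];
    if (!oStep || typeof oStep.action !== 'string') { return false; }
    if (!(Number(oStep.measure) > 0)) { return false; }
    if (aKnownUnits.indexOf(oStep.units) === -1) { return false; }
  }
  return true;
}
```

A check like this could run in a ‘script’ element or on the remote server before an instruction is acted upon.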

By the way, if you want to use built-in support for recognizing yes-or-no, numbers, dates, etc as part of a custom grammar, then you’ll need to use a ‘ruleref’ like this,

<rule id="DepthSpec" scope="public">

<item>

<ruleref uri="#builtinNumber"/>

<tag>out.measure = rules.builtinNumber</tag>

</item>

</rule>

<rule id="builtinNumber">

<item>

<ruleref uri="builtin:grammar/number"/>

</item>

</rule>

URI’s for other types can be inferred from the “grammar src” examples at http://help.voxeo.com/go/help/xml.vxml.grxmlgram.builtin (although these might be specific to the Voxeo vxml platform).

If you follow this grammar-writing approach, then you can access the JSON-structured parse result by reading property values from the field variable containing the grammar (e.g., “ffYourNameForTheState” above), just as if it were the “out” variable of your root grammar rule that you’ve been assigning to. These values can be used in ‘filled’ elements either to guide if-then-else conditions, or be sent to a remote server as we’ll see in the next major section, “Dynamic prompts and Web queries”.

Managing ambiguity

As a side note, if you’re an ambiguity nerd like me, you’ll probably be interested to know that Vxml 2.0 doesn’t specify how homophones or syntactic ambiguity must be handled. But Voxeo provides a way to get multiple candidate parses.

Dynamic prompts and Web queries

So far, we can simulate one side of a canned conversation via a network of expected conversational states. It’s similar to a Choose-Your-Own-Adventure book in that it allows variety in which branches are followed, but it’s “canned” because all the prompts are static. But often we need dynamic prompts, especially when answering a user question via a web query. JavaScript can be used to provide such dynamic content by placing a ‘value’ element as a child of a ‘prompt’ element, and placing the script as the value of ‘value’s ‘expr’ attribute, like this:

<assign name="firstNumberGiven" expr="100"/> <!-- Simulate getting a number spoken by the user -->

<assign name="secondNumberGiven" expr="2"/> <!-- Simulate getting a number spoken by the user -->

<prompt>The sum of <value expr="firstNumberGiven"/> and <value expr="secondNumberGiven"/> is <value expr="firstNumberGiven + secondNumberGiven" /> </prompt>

The script can access any variable or function in the lexical scope of the ‘value’ element; that is, any variable declared in a containing element (or its descendants that appear earlier). Also notice that, by default, adjacent digits from a ‘value’ element are read as a single number (e.g., “one hundred and two”) rather than as digits (e.g., “one zero two”). That’s convenient, because one can’t embed a ‘say-as’ element in the ‘expr’ result, although one can force pronunciation as digits by inserting a space between each digit (e.g., “1 0 2”) perhaps by writing a JavaScript function (see http://help.voxeo.com/go/help/xml.vxml.tutorials.java); otherwise, if the default were to pronounce as digits, then forcing pronunciation as a single number would require a much more complicated function.
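A minimal sketch of such a digit-spacing function follows. The name asSpokenDigits is made up for this example, and dropping non-digit characters (such as the dashes in a phone number) is a design choice of the sketch rather than anything vxml requires.

```javascript
// Turns 102 (or "102") into "1 0 2" so that a prompt reads it digit by digit.
// Non-digit characters are dropped.
function asSpokenDigits(value) {
  return String(value).replace(/\D/g, '').split('').join(' ');
}
```

Assuming the function is defined in a ‘script’ element that is in scope, it could then be called from a prompt with something like expr="asSpokenDigits(firstNumberGiven)".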

I’ve said little to nothing about interaction design in speech applications, although it’s very important to get right, as anyone who’s become frustrated while using a speech- or touchtone-based interface knows well. But one principle of interaction design that I will emphasize is that user commands should usually be confirmed, especially if they will change the state of the world and might be difficult to undo. When grammars are configured to return flat-string results, prompting for confirmation is easy to configure like this:

<prompt>I think you said <value expr="recResult"/> Is that correct? </prompt>

But when a grammar is configured to return JSON-structured results, the ‘value’ element above might be read as just “object object” (the result of JavaScript’s default stringify method for JSON objects, at least in Voxeo’s vxml interpreter). I believe the best solution is to write a JavaScript function (in an external file referenced with a ‘script’ element near the top of the vxml file) that is tailored to construct a string meaningful to your users from your grammar’s JSON structure, then wrap the “recResult” variable (or whatever you name it) in a call to that function. If there is any need to nudge users toward using terms that are easier for your grammar to recognize, then this custom stringify function is an opportunity to paraphrase their commands back to them using your preferred terms.
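Here is one way such a function might look, assuming the recognition result has the “steps” array built by the “ActionExpr” rule earlier. The function name describeInstruction and the “, then” joining phrase are illustrative choices, and the syntax is kept to older-style JavaScript in the hope that it runs in a vxml interpreter’s script engine.

```javascript
// Builds a confirmation phrase from a JSON-structured recognition result.
// Flat-string results are passed through unchanged.
function describeInstruction(oRecResult) {
  if (typeof oRecResult === 'string') {
    return oRecResult;
  }
  if (!oRecResult || !oRecResult.steps || oRecResult.steps.length === 0) {
    return ''; // nothing to read back; the caller should re-prompt instead
  }
  var aPhrases = [];
  for (var i = 0; i < oRecResult.steps.length; i++) {
    var oStep = oRecResult.steps[i];
    // Using the grammar's canonical action name nudges users toward it.
    aPhrases.push(oStep.action + ' ' + oStep.measure + ' ' + oStep.units);
  }
  return aPhrases.join(', then ');
}
```

The confirmation prompt would then use expr="describeInstruction(recResult)" in place of expr="recResult".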

Now we’re ready to talk about sending JSON-structured recognition results to remote servers, which is the most exciting feature of vxml 2.1 for me, because it’s half of what we need to make vxml documents able to leverage the same RESTful web APIs that dhtml documents can. (The other half, being able to digest the server’s response, will be discussed soon. “dhtml” === “Dynamic HTML”, which is a combination of html and JavaScript fortunate enough to find itself in a browser that has JavaScript enabled.)

Like html forms, vxml provides a way for its forms to submit to a remote server. And also like html, the response must be formatted in the markup language that was used to make the submission, because the response will be used to replace the document containing the requesting form. Html developers realized that their apps could be more responsive if less content needed to travel to and from the remote server: if they requested just the gist of what they needed, and the response encoded that gist in a markup-agnostic format like XML or JSON, then JavaScript in their browser-based client could manipulate the DOM of the current document, which might usually be faster than requesting an entirely new document (even if most of its resources could be externalized into JavaScript and CSS files that can be cached).

Because these markup-agnostic APIs are becoming widely available, they present an opportunity for non-html client markup languages like vxml to leverage them. The vxml 2.1 spec provides for this by adding a ‘data’ element as an alternative to vxml form submission. Here’s an example:

<var name="sInstructionHeard" expr="JSON.stringify(_oInstructionHeard)"/>

<data method="post"

srcexpr="_sDataElementDestinationUrl + '/executeInstructionList'"

enctype="application/x-www-form-urlencoded"

namelist="sInstructionHeard"

fetchhint="safe"

name="oRemoteResponse"

ecmaxmltype="e4x" />

The ‘data’ element isn’t as user-friendly as it might be. For example, one can’t just put the JSON-structured recognition result in it and expect it to be transferred properly; instead, one must first JSON.stringify() it (this method is native to most dhtml browsers circa 2014 and to Voxeo’s vxml interpreter). And the ‘data’ element requires that even POST bodies be url-encoded, so the remote server must decode using something like this (assuming you’re using a NodeJs server):

sBody = decodeURIComponent(sBody.replace(/\+/g, ' '));

sBody = sBody.replace('sInstructionHeard=',''); //Strip off the query parameter name (the variable listed in the 'data' element's 'namelist') to leave the bare value

sBody = (sBody ? JSON.parse(sBody) : sBody);
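An alternative sketch for the same decoding step uses the URLSearchParams class that recent NodeJs versions provide as a global, which handles the ‘+’ and percent-decoding and looks the value up by name. It assumes the POST parameter carries the name given in the ‘data’ element’s ‘namelist’ attribute (sInstructionHeard in the example above); the function name parseInstructionBody is made up for illustration.

```javascript
// Decodes a url-encoded POST body and parses the JSON held in the
// 'sInstructionHeard' parameter. Returns null if the parameter is absent.
function parseInstructionBody(sBody) {
  var oParams = new URLSearchParams(sBody);
  var sJson = oParams.get('sInstructionHeard');
  return sJson ? JSON.parse(sJson) : null;
}
```

Looking the value up by name also keeps working if more variables are later added to the ‘namelist’.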

What the remote server needs to do for its response is easier:

oResponse.writeHead(200, {'Content-Type': 'text/xml'});

oResponse.end('<result><summaryCode>stubbedSuccess</summaryCode><details>detailsAsString</details></result>');

If the server is reachable and generates a response like this, then the variable above that I named “oRemoteResponse” will be JSON-structured and have a ‘result’ property, which itself will have ‘summaryCode’ and ‘details’ properties whose values, in this case, are string-formatted. You have the freedom to use any valid XML element name — which is also a valid JSON property name — in place of my choice of ‘result’. The conversion from the remote server’s XML formatted response to this JSON structure is done implicitly by the vxml interpreter due to the ecmaxmltype="e4x" attribute. (The vxml 2.1 interpreter cannot process a JSON-formatted response as dhtml browsers can.) These JSON properties from the remote server can be used to control the flow of conversation among the ‘form’s in the same way we used JSON properties from “semantic” speech recognition earlier. Coolness!
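Because the response body above is assembled by string concatenation, any ‘&’ or ‘<’ in the details would make the XML malformed. A small helper along these lines could guard against that; the names escapeXml and buildResultXml are assumptions for this sketch, and the element names simply follow the example response.

```javascript
// Escapes the characters that would otherwise break XML element content.
function escapeXml(sText) {
  return String(sText)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Builds the <result> document returned to the vxml 'data' element.
function buildResultXml(sSummaryCode, sDetails) {
  return '<result>' +
    '<summaryCode>' + escapeXml(sSummaryCode) + '</summaryCode>' +
    '<details>' + escapeXml(sDetails) + '</details>' +
    '</result>';
}
```

The server would then call oResponse.end(buildResultXml('stubbedSuccess', 'detailsAsString')) instead of writing the string literal.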

A few final comments about ‘data’ elements:

- To validate the xml syntax of your app, you probably want to upload it to the W3C xml validator; however, the ecmaxmltype="e4x" attribute is apparently not part of the vxml 2.1 DTD, which the validator finds at the top of your file if you’re following my template above, and so you will get a validation error that you’ll have to assume is spurious and ignorable.

- My app uses a few ‘data’ elements to send different kinds of requests, so to keep the url of the remote server in-sync across those, I have a ‘var’ element before all my forms in which I define the _sDataElementDestinationUrl url value.

- fetchhint="safe" disables pre-fetching, which isn’t useful for dynamic content like the JSON responses we’re talking about.

- If you want to enable caching, which doesn’t make sense for dynamic JSON content like we’ve been talking about but would be reasonable for static content, you’d do that via your remote server’s response headers.

- If the remote server isn’t reachable, the ‘data’ element will throw an ‘error.badfetch’ that can be caught with a ‘catch’ element to play a prompt or log error details, but unfortunately this error is required by the spec to be “fatal” which appears to mean the app must exit (in vxml terms, I believe it means the form-interpretation algorithm must exit). That’s a more severe reaction than in dhtml which allows DOM manipulation and further http requests to continue indefinitely. Requiring such errors to be fatal blocks such potential apps as a voice-driven html browser that reads html content, because it could not recover from the first request that fails. But maybe I’m interpreting “fatal” wrong; Voxeo’s vxml interpreter seems to allow interaction to continue indefinitely if this error is caught with a ‘catch’ element that precedes a ‘form’.

- If the remote server is reachable but must return a non-200 response code, the ‘data’ element will throw ‘error.badfetch.DDD’ where DDD is the response code. This error is also “fatal”.

At this point, we’ve covered all that I think is core to authoring a speech application using vxml 2.1. For more details, the vxml 2.0 spec and its 2.1 update are the authoritative references. Voxeo’s help pages are also quite useful.

Up next: Test-driven development of speech applications, and Hosting a speech app using Voxeo.